Latest Posts

Making things elastic.

cloudformation

Overview

AWS and the cloud in general ( in whatever form that takes ) is all about being elastic. This elastic property is what can ultimately save us money and make our lives easier.

At one point many moons ago, I had a discussion with a collogue regarding the cost of a data center versus the cost of the cloud. His argument that the cloud ends up costing more than a data center. His perspective and experience made this understandable. From his perspective the cloud was big, stupid and expensive. My experience has been similar in that I've seen enterprise companies do really stupid things over a protracting time, which ends up costing them lots of money.

In this post I'm going to explore one of many ways in which we can change this dynamic and help people utilize the real power of the cloud. We do this by working the elastic aspect of our compute resources. Specifically we're going to work around our development areas. This could apply to our other higher environments, but for now let's just stick with a very specific use case that involve developer pipelines in our CI/CD process. In a nut shell we want to keep our compute costs down while still allowing our developers to iterate quickly. We do this with AutoScaleGroups ( ASG ) and CloudWatch Trigers ( CW, CWT ).

We start this journey by looking at how developers can use the ElasticContainerService ( ECS ) in AWS. Our wokflow would look like this for a typical deployment in our CI/CD pipeline.

- aws ecr get-login have docker login to ECR

- Push the docker container to ECR with docker push

- Use the aws cli to create a new task def.

- Update the currently running service with the new task def id.

In the previous post I demonstrated how to create an ECS cluster using an ASG. The ASG started with min: 1, max: 1, desired: 1 this is so that we can keep the expenses of the dev environment low. However, keeping the profile low causes a problem at this point in the workflow. When a new task is created and the service is updated the cluster will effectively be trying to schedule two tasks of similar configurations on the same cluster. This is how this might play out on a cluster using a simple t1.micro which has a total of 2048 CPU and 2000 RAM. Each application task def is designed to take exactly have of the resources, so 1024 CPU and 1000 RAM per task.

| CPU Availiable | CPU Reserved | RAM Avaliable | RAM Reserved | |

|---|---|---|---|---|

| Inital state ( no apps deployed ) | 2048 | 0 | 2000 | 0 |

| First version of application is deployed | 1024 | 1024 | 1000 | 1000 |

| Second version of application is deployed | 0 | 2048 | 0 | 2000 |

- When we first schedule a task on the cluster we put the cluster we end up allocating exactly 50% of the cluster.

- When we do our first deployment we end up having two tasks on the cluster, which means the cluster is now 100% utilized.

- The first task def will eventually bleed out and deallocate itself from the cluster leaving the cluster at 50% capacity.

This is where we can get into trouble. If we only have one task on the cluster and we're only doing one deployment at a time, then we should be fine here. However, that's highly unlikely in a world of microservices. In most cases we're going to have at least 3, probably more, maybe even as many as 10 services running on this cluster. We could have things like redis, or even our database running on the cluster. In some cases running the db right on the cluster can make our iterations faster.

At this point, if we have more than two services we'll end up with a completely consumed cluster after our first deployment. This is where the power of the ASG and CWT can come in. We're going to implement a simple trigger that is going to expand the cluster out quickly, then slowly bring it back to one over the course of a few hours.

Use case

The user story that we're solving here is from the perspective of a developer coming into work on any given morning. Or, if you're in the valley, maybe closer to early afternoon.

Our development team might even have CI/CD pipelines running builds early in the morning on master or a release branch as a matter of automation like a nightly build, but done early in the morning to take advantage of this particular functionality. The build fires off, either by automation or by someone doing their first build of the day. The cluster ends up getting maxed out because it's wildly under provisioned at 1/4/1.

The CWT can detect that the cluster is over 75% reservation and automatically fire up +5 more instances. At least, that's the request, but since we've set our max=4 on the ASG, only 3 instances will be spun up. This is in order to keep an absolute ceiling on the ASG. My setting of 4 is completely arbitrary, of course, you should use whatever setting you feel is proper for your layout.

Eventually the old tasks will bleed off and we'll be back to a <75% reservation. The CWT trigger waits until the cluster is under 50% reservation, then it will start taking 1 instance down per hour as long as the reservation is under 50% during that time. If, at any point the reservation goes above the 75% threshold, the ASG will try to keep the desired count at the max, or whatever the calculation is based on the trigger.

Basically we're liberal about giving compute instances to developers that need the resources, but conservative about taking them away. Once the resources are no longer needed, the ASG will scale back down to it's original setting of 1/4/1 until the next morning.

This allows us to actually be elastic about our compute resources, but more importantly we're using a system that is constantly giving us fresh compute resources and rolling out old ones. This means that it's nearly impossible to maintain any one-off hacks on the instances.

In some cases developers might find this to be an insufferable pain in the ass because it completely blocks them from being able to do any custom things on the compute resources. We, of course, appreciate their concern, but ultimatly should help them find a better way of customizing their environment. </b>

Let's do this!!

Along with the CWT I'm also going to tie into something that my framework uses called the modular composition thingie. It's basically a way to shorthand big things in my yaml config. My plan here is to use this system to implement a modular way of plugging this pattern into any and all ASG's in a template.

This is the first part of my configuration file for the workout tracker:

---

name: "WorkoutTracker"

owner: "Bryan Kroger (bryan.kroger@gmail.com)"

template: "ecs"

description: "Simple workout tracker application using ECS."

modules:

- "base"

- "vpc"I'm going to add something to the modules list here called cwt then add some bits that allow me to tune my params for this module. This is my new config.

---

name: "WorkoutTracker"

owner: "Bryan Kroger (bryan.kroger@gmail.com)"

template: "ecs"

description: "Simple workout tracker application using ECS."

modules:

- "base"

- "vpc"

- "ecs-cwt-capacity"My modular system works by loading up a module in the templates directory, then doing a deep merge with the content of the current template data structure. This allows me to express smaller, more modular chunks of code and just reuse each piece as needed. I have modules that handle all kinds of things. I can even integrate chef by simply adding a line to the modules system. Or, I can leave it out and do something custom like I'm doing here with the ECS stack.

Now when I create my stack I end up with a bunch of cloud watch alarms attached to the cluster. stack.json params.json

Wrap up

There's actually a big flaw in this design in that I'm hard coding the Ref to the ASG in the module. I plan on breaking out my modular system into a more versatile system that can handle these conditions better with a little extra code. Much like the params system. That'll be the next version. So far, I haven't had a huge need to do something like this, but this seems like the perfect use case for doing the work.

Read More →Creating a simple application using the workout tracker.

cloudformation

Overview

The workout tracker program is a super dead simple little golang app that I've been working on over the past year or so. It started out with a simple design as a way to help me keep track of my workouts. Easy enough, right? Well, it turns out that the golang stuff was actually a bit of a flop. I ended up solving the problem using google sheets and a little ruby code.

However, what I learned during this process is that my little go binary was super good at doing one very simple thing in that I could wrap up the bin into a docker container and use it to do many POC things with AWS ECS. In fact, I used this little fella extensively at a client to show how ECS deployments worked.

This was the workflow for demonstrating how we could do b/g deployments in ECS:

- Tune Makefile in project to reflect new version. For example we might change "0.4.0" to "0.4.1".

- make build will create a new binary which includes the new version string.

- make docker_image will run docker build, which creates a new docker image with the new binary.

- make push tags and pushes the docker image to ECR

- Then we had a little code that would create a new task def, then update the service with the new task def ID

- That would cause a deployment to happen in ECS for our service

- While all of this is going on we would be: curl http://iambatman.client.com/version every second which returns a json string with the version info.

The output would look something like this:

{ "version": "0.4.0" }

{ "version": "0.4.0" }

{ "version": "0.4.0" }

{ "version": "0.4.1" }

{ "version": "0.4.0" }

{ "version": "0.4.1" }

{ "version": "0.4.0" }

{ "version": "0.4.1" }

{ "version": "0.4.1" }

{ "version": "0.4.1" }

{ "version": "0.4.1" }

{ "version": "0.4.1" }

{ "version": "0.4.1" }Both versions are running until the new version is completely switched over and all traffic has switched over to the new task. ECS, like most systems like this, has free b/g deployments. Pretty neat, huh?

The intent with this post is to show how to create a dead simple container like this that can deploy a simple application that does a simple thing. Simply.

Design

Let's start this process by laying out what we want to accomplish. For now, I'm going to skip the DNS integration. I might do that in a later post as to not confuse the simplicity of this project.

- Create a load balancer that can distribute traffic between application resources.

- Deploy compute resources for the cluster.

- Use ECS to deploy a containerized application on the cluster.

- Service should expose /version on the ALB DNS end point.

The devops project contains everything I need to launch a stack that does exactly this. This is something that I've been putting together for years based on my work with many different clients representing many different business models. Here's how I launch my stack:

krogebry@ubuntu-secure:~/dev/devops/aws$ rake cf:launch['workout-tracker, 0.0.8, WorkoutTracker']

D, [2017-08-08T15:32:38.329970 #3245] DEBUG -- : FS(profile_file): cloudformation/profiles/workout-tracker.yml

D, [2017-08-08T15:32:38.970751 #3245] DEBUG -- : MgtCidr - {"type"=>"vpc_cidr", "tags"=>{"Name"=>"main"}}

D, [2017-08-08T15:32:38.971575 #3245] DEBUG -- : KeyName - devops-1

D, [2017-08-08T15:32:38.972031 #3245] DEBUG -- : DockerImageName - {"type"=>"docker_image_name", "image_name"=>"workout-tracker"}

D, [2017-08-08T15:32:38.972579 #3245] DEBUG -- : VpcId - {"type"=>"vpc", "tags"=>{"Name"=>"main"}}

D, [2017-08-08T15:32:38.973277 #3245] DEBUG -- : Zones - {"type"=>"zones", "tags"=>[{"Name"=>"public"}, {"Name"=>"private"}]}

D, [2017-08-08T15:32:38.974151 #3245] DEBUG -- : Subnets - {"type"=>"subnets", "tags"=>[{"Name"=>"public"}, {"Name"=>"private"}]}

D, [2017-08-08T15:32:38.977673 #3245] DEBUG -- : Loading module: cloudformation/templates/modules/base.json

D, [2017-08-08T15:32:38.981519 #3245] DEBUG -- : Loading module: cloudformation/templates/modules/vpc.json

D, [2017-08-08T15:32:39.093917 #3245] DEBUG -- : Stack exists(WorkoutTracker-0-0-8): false

D, [2017-08-08T15:32:39.094576 #3245] DEBUG -- : Creating stackMost of this is debugging info that I use to keep track of what's going on. The most important part here is the rake command, which consists of 4 parts:

- cf:launch this is the rake target which contains the code I'm about execute

- workout-tracker this is the profile yaml file I'm going to use to get things going. This config file contains the template I'm going to use.

- 0.0.8 version of the stack. I use this to increase the velocity of my designs. I don't have to wait for a stack to delete before creating a new one. This also allows me to implement b/g at the infrastructure level. Very handy.

- WorkoutTracker the name of the CF stack.

The name of the CF stack ends up being WorkoutTracker-0-0-8. Now I can use the version as a sort of "point in time" reference for where I'm at with the infrastructure code. This is the most difficult way of producing CF stacks because it's forcing people to think of infrastructure as a gathering of versioned things, which is different than what we're used to, which is something that lives in reality as a 1u component in a rack. I personally prefer this model because I can create things faster and better here. However, not everyone agrees with me on this one.

This does have it's draw backs, of course. However, in one particular case called the blast radius argument, it's very easy to see how this would be better than traditional "non-versioned" layouts. An example that demonstrates this would be how someone might argue that we should breakout the security groups for a given stack into their own stack. This is fine, but the problem is that if they are broken out, and multiple services are using a single SG, then if someone changes that SG, then everything that touches that SG is exposed. This is a very short example of how one change can have a "blasting" effect which "radiates" to more than we intend to. Keeping the SGs inside the stack ensures that if something is changed, then the only thing impacted by that change is the thing that is contained within the stack.

Implementation

I've used my code to create a stack, now we can start investigating what has been created. First off I want to test to see if my ALB is working as I intend.

krogebry@ubuntu-secure:~/dev/workout_tracker$ curl Worko-EcsAL-W72TLYSV79IS-1289125696.us-east-1.elb.amazonaws.com/version

{"version":"0.5.0","build_time":"2017-08-08T13:52:02-0700","hostname":""}We can see here that the ALB end point is working from my workstation here at home.

The stack and params file are available for this demo. Here's a detailed list of what we're creating here.

- ASG that spins up a single EC2 instance.

- Security group for the EC2 instances.

- ALB and TargetGroup.

- Security group for the ALB entry point ( 0.0.0.0/0:80 )

- IAM roles for the EC2 instances as well as the ECS service.

- ECS service and task def.

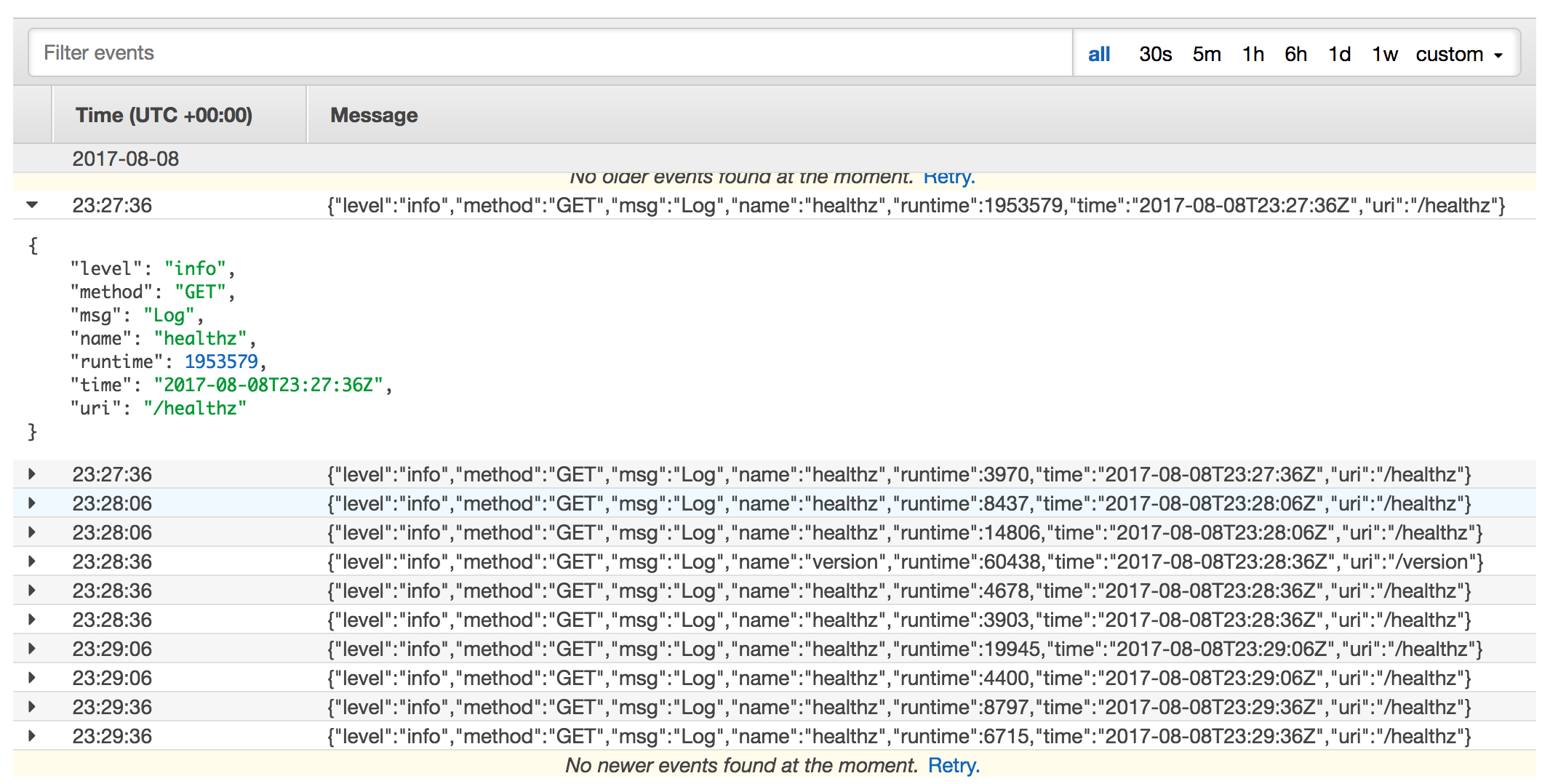

The workout tracker implements JSON logging output, which is being sent through ECS to cloudwatch logs. This doesn't do me any good at the moment, but having json logs is just good stuff all around.

The NetworkDoctor

cloudtrail

The idea with the netdoc was to create a way to check a few important aspects regarding how a vpc and subsequent subnets+route tables are setup.

User story

The need for this netdoc comes about from the following story. To set this up, let's first define our subnet layout as such.

- VPC ( tag:Name,value:dev )

- Subnets:

- lbs: Public-facing load balancers.

- routing: [E,A]LB's from lbs route to here and here only. This is where we'll have our Nginx clusters doing URI routing.

- application_lbs: Application level [E,A]lbs

- applications: Applications, like EC2 instances for our ECS clusters.

- db_conn: Subnets for connecting RDS/DynamoDB

- cache: Subnets for our caching layer like memc or redis

- monitoring: Any time of monitoring or logging agents, like splunk forwarders or promethus rigs

- secdef: Security and defense applications.

- bastion: Jump boxes

That's 9 subnets. And yes, this is very close to a legit, no bullshit, enterprise layout that people often try to employ. A reasonable person might suggest that you wouldn't need this many subnets if you understand the nature of security groups. I agree, however, it is often the case that logic and reason fail to compare to the power of the emotional side which usually drives these decisions. People often prefer to think they're safe, even if that means wildly over designed things.

It could also be argued that the over complexity of something like this leads to dangerous overtaxing of human based cognitive resources while we meat sacks attempt to debug problems. I guess that's why I started doing these projects; it's easier to run a script to tell me if something is wrong with your Rube Goldberg network layout.

At one point we were working on a client which insisted that we automate everything. And I do mean everything, from the key pair creation to the vpcs and everything else. That's an ambitious goal for something that wasn't even close to limping its way out of the dev space. At any rate, as you could imagine, managing the level of complexity became an almost daily chore. The people managing the system would often make changes to things in production to "just get things working" rather than submitting bugs and following the procedures. At one point we were troubleshooting a collection of problems with the dev account regarding traffic flow. The problem that we faced most often was not being able to tell at a glance if the subnets were able to talk to each other, and more specifically talk to their respective igw/nat chains.

My thinking here is to be able to create something that can work with the TT system and help me grade a vpc/subnet rig based on it's ability to route and maybe a few other factors. This would be similar to the work down with awspec in that we're validating certain things about how aws resources are connected and used. However my thinking here is to create a rule in TT that can help us validate our assumptions.

It also might turn out that I end up breaking the netdoc off into its own little project that solves a very specific space.

Output

krogebry@ubuntu-secure:~/dev/tattletrail$ rake netdoc:check_vpc['main']

D, [2017-07-30T14:57:30.083456 #7526] DEBUG -- : Creating NetworkDoctor for main

D, [2017-07-30T14:57:30.426432 #7526] DEBUG -- : Checking vpc: main

D, [2017-07-30T14:57:30.426509 #7526] DEBUG -- : CacheKey: vpc_us-east-1_Name_main

D, [2017-07-30T14:57:30.426657 #7526] DEBUG -- : Checking subnets

D, [2017-07-30T14:57:30.426697 #7526] DEBUG -- : CacheKey: vpc_subnets_vpc-21d67f46

D, [2017-07-30T14:57:30.426855 #7526] DEBUG -- : CacheKey: vpc_main_route_vpc-21d67f46

D, [2017-07-30T14:57:30.426971 #7526] DEBUG -- : CacheKey: vpc_subnet_route_subnet-5441157e

I, [2017-07-30T14:57:30.427150 #7526] INFO -- : Subnet is routable

D, [2017-07-30T14:57:30.427213 #7526] DEBUG -- : CacheKey: vpc_subnet_route_subnet-816b3ad9

I, [2017-07-30T14:57:30.427443 #7526] INFO -- : Subnet is routable

D, [2017-07-30T14:57:30.427501 #7526] DEBUG -- : CacheKey: vpc_subnet_route_subnet-629bad5f

I, [2017-07-30T14:57:30.427652 #7526] INFO -- : Subnet is routable

D, [2017-07-30T14:57:30.427708 #7526] DEBUG -- : CacheKey: vpc_subnet_route_subnet-d2c5c1a4

I, [2017-07-30T14:57:30.427852 #7526] INFO -- : Subnet is routableCreating simple security group rules.

cloudtrail

Security group rules

Introduced a handler to high alert anyone who has changed a security group via the UI.

rule 'User opens TCP to world' do

match_all

threat_level :high

match 'eventName' do

equals 'AuthorizeSecurityGroupIngress'

end

performed 'by user' do

by :user

via :console

end

opens_cidr 'world' do

cidr '0.0.0.0/0'

end

endIn this example we would see a high alert if someone has opened all TCP/UDP to 0.0.0.0/0.

Here's another example of the idea. In this example we're excluding rules that pertain to :80 and :433. This rule also specifically targets actions that were performed via a cloudformation script.

This would catch anyone who has launched a CF stack which has an obvious security problem. In this case that might be something like :22 from 0.0.0.0/0 or basically any combination of ports that isn't :80 or :443 and is open to the world.

rule 'Cloudformation script opens TCP to world' do

match_all

threat_level :medium

match 'eventName' do

equals 'AuthorizeSecurityGroupIngress'

end

performed 'by user' do

by :user

via :cloudformation

end

opens_cidr 'world' do

cidr '0.0.0.0/0'

ignore_port 80

ignore_port 443

end

endMore rules files

This version also improves the rule ingestion in that we can now have many files in the ./rules/ dir.

rules = ""

Dir.glob(File.join('rules/*.rb')).each do |filename|

rules << File.read(filename)

endSlightly better output

D, [2017-07-30T12:48:04.220619 #6839] DEBUG -- : Creating match rule for world

I, [2017-07-30T12:48:04.223147 #6839] INFO -- : Cloudformation script opens TCP to world

I, [2017-07-30T12:48:04.223776 #6839] INFO -- : krogebry 2017-07-30 04:28:46 UTC

I, [2017-07-30T12:48:04.224130 #6839] INFO -- : Cloudformation script opens TCP to world

I, [2017-07-30T12:48:04.224355 #6839] INFO -- : krogebry 2017-07-30 04:28:46 UTCNext up

I seem to have a pattern of completely ignoring my "next up" section.

- Grading system which can produce A,B,C,D,Failing grades based on the execution of the rules.

- Better output reporting

- MFA type rules

- More work on gathering the data

Starting the tattle trail project

cloudtrail

https://github.com/krogebry/tattletrail

Kicking off this project with a basic DSL in ruby which allows me to create a few rules. I then evaluate these rules and create basic output that confirms I'm on the right path.

- Express simple rules which can be elvaluated in a ruby DSL manner.

- Output should contain the name of the rule that matched based on the rule and the threat level assigned o this rule

- Event data to see what's going on with the given rule.

I'm leaving out the obvious testing kit things. The performed action isn't very smart yet.

There are currently two rules at play here.

Delete commands from the UI

A high-level security alert is anyone deleting a thing from the UI by hand. This would indicate a user doing something manually outside of a scripted action. The userAgent field will contain a specific string indicating that this action was done via the UI console.

This would be an example of a rule that might not be needed in the lower accounts like dev or maybe even stage, but would be very relevant in production.

rule "Delete commands from the UI" do

match_all

threat_level :high

match 'eventName' do

starts_with 'Delete'

end

performed 'by user' do

by :user

via :console

end

endNew KeyPair was created by hand

This is another 'quick and dirty' rule that would indicate someone created a new keypair by hand via the UI.

rule 'User creates a new key pair' do

match_all

threat_level :high

match 'eventName' do

equals 'CreateKeyPair'

end

performed 'by user' do

by :user

via :console

end

endReport output

krogebry@ubuntu-secure:~/dev/tattletrail$ rake tt:report

D, [2017-07-29T18:16:26.258836 #3625] DEBUG -- : Creating base

D, [2017-07-29T18:16:26.258915 #3625] DEBUG -- : Starting reporter

D, [2017-07-29T18:16:26.259087 #3625] DEBUG -- : Creating rule: Delete commands from the UI

D, [2017-07-29T18:16:26.259173 #3625] DEBUG -- : Creating base

D, [2017-07-29T18:16:26.259217 #3625] DEBUG -- : Creating match rule for eventName

D, [2017-07-29T18:16:26.259315 #3625] DEBUG -- : Starting reporter

D, [2017-07-29T18:16:26.259378 #3625] DEBUG -- : Performed by: user

D, [2017-07-29T18:16:26.259410 #3625] DEBUG -- : Via: console

D, [2017-07-29T18:16:26.259533 #3625] DEBUG -- : Creating rule: User creates a new key pair

D, [2017-07-29T18:16:26.259622 #3625] DEBUG -- : Creating base

D, [2017-07-29T18:16:26.259709 #3625] DEBUG -- : Creating match rule for eventName

D, [2017-07-29T18:16:26.259782 #3625] DEBUG -- : Starting reporter

D, [2017-07-29T18:16:26.259845 #3625] DEBUG -- : Performed by: user

D, [2017-07-29T18:16:26.259932 #3625] DEBUG -- : Via: console

I, [2017-07-29T18:16:26.260846 #3625] INFO -- : Delete commands from the UI level high

{"eventVersion"=>"1.05",

"userIdentity"=>

{"type"=>"Root",

"principalId"=>"ACCOUNT_ID",

"arn"=>"arn:aws:iam::ACCOUNT_ID:root",

"accountId"=>"ACCOUNT_ID",

"accessKeyId"=>"ASIAJTO6NK5FCFGA7GDA",

"sessionContext"=>

{"attributes"=>

{"mfaAuthenticated"=>"true", "creationDate"=>"2017-07-30T00:15:11Z"}}},

"eventTime"=>"2017-07-30T00:16:14Z",

"eventSource"=>"ec2.amazonaws.com",

"eventName"=>"DeleteRouteTable",

"awsRegion"=>"us-east-1",

"sourceIPAddress"=>"SOURCE_IP",

"userAgent"=>"console.ec2.amazonaws.com",

"requestParameters"=>{"routeTableId"=>"rtb-b89e9dc0"},

"responseElements"=>{"_return"=>true},

"requestID"=>"a2e704e0-5968-4132-985c-73ddfa0e7e3b",

"eventID"=>"ded47154-0091-486b-94c1-d17b38216ebb",

"eventType"=>"AwsApiCall",

"recipientAccountId"=>"ACCOUNT_ID"}

I, [2017-07-29T18:16:26.264946 #3625] INFO -- : User creates a new key pair level high

{"eventVersion"=>"1.05",

"userIdentity"=>

{"type"=>"Root",

"principalId"=>"ACCOUNT_ID",

"arn"=>"arn:aws:iam::ACCOUNT_ID:root",

"accountId"=>"ACCOUNT_ID",

"accessKeyId"=>"ACCESS_KEY_ID",

"sessionContext"=>

{"attributes"=>

{"mfaAuthenticated"=>"true", "creationDate"=>"2017-07-25T20:07:17Z"}}},

"eventTime"=>"2017-07-25T20:12:03Z",

"eventSource"=>"ec2.amazonaws.com",

"eventName"=>"CreateKeyPair",

"awsRegion"=>"us-east-1",

"sourceIPAddress"=>"SOURCE_IP",

"userAgent"=>"console.ec2.amazonaws.com",

"requestParameters"=>{"keyName"=>"devops-1"},

"responseElements"=>

{"requestId"=>"408ea540-ba83-4564-abf7-d7aa99dd8d10",

"keyName"=>"devops-1",

"keyFingerprint"=>

"7f:b6:23:44:2b:e7:62:2e:4a:e2:70:d0:96:7f:06:f1:fd:38:60:81",

"keyMaterial"=>"<sensitiveDataRemoved>"},

"requestID"=>"408ea540-ba83-4564-abf7-d7aa99dd8d10",

"eventID"=>"d9bbcb98-9a9c-4ab8-9a14-4382e3f5d3fc",

"eventType"=>"AwsApiCall",

"recipientAccountId"=>"ACCOUNT_ID"}Next up

- Make the performed logic smarter. Probably going to do more work around the specifics of a user actions versus an admin action.

- Introducing more random data into the processing to see what comes up.

- Introduce cloudformation actions that could be interesting